Architecting a system that’s used 24/7 by millions of users around the globe isn’t something most of us do every day. Visual Studio Team Services (VSTS) is one of those systems, and it is instructive to see which decisions and changes the team made while moving from an on-premises product to a cloud service. In this post I’ll discuss some of the notable aspects of the VSTS architecture and give you a look behind the scenes.

This is part 5 of a series on DevOps at Microsoft:

- Moving to Agile & the Cloud

- Planning and alignment when scaling Agile

- A day in the life of an Engineer

- Running the business on metrics

- The architecture of VSTS

- Changing the story around security

- How to shift quality left in a cloud and DevOps world

- Deploying in a continuous delivery and cloud world or how to deploy on Monday morning

- The end result

Moving to the cloud

When you have an on-premises application, you have a couple of options when moving to the cloud. You could start by refactoring your application to be more of a cloud service and then move it. You could also start by moving to the cloud and evolve your application as you go. The VSTS team chose the second option. The biggest reason for this is that as long as you’re not in the cloud, you don’t know what you need. By moving fast, you learn valuable lessons that you would have learned much later if you had started by refactoring your architecture.

When Microsoft started to move TFS to the cloud, TFS 2010 had just been released. TFS 2010 ran on IIS, stored all data in SQL Server and integrated fully with Active Directory. The VSTS team picked up this application and moved it to Azure. This resulted in a single-tenant system where each account got its own database in the Azure North Central (Chicago) region. At some point they were up to 11,000 databases! There was no telemetry yet and upgrades were done by taking the system fully offline. There also wasn’t a real deployment cadence yet: deployments were scheduled depending on the features that were ready. And of course the team wasn’t used to supporting a cloud service. For example, engineers weren’t used to being on call and receiving calls in the middle of the night because something was wrong with the service.

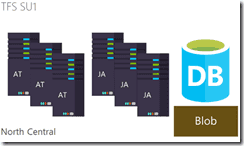

The basic architecture of VSTS was something like the following diagram:

Figure 1 TFS consisted of Application Tiers, Job Agents, databases and blob storage

Azure PaaS web and worker roles were used to run the application. Application tiers serve the web UI and expose all the endpoints. Job agents run background processes like cleaning up, processing check-ins and scheduling builds. The database runs on SQL Azure and stores only metadata. All the actual data, such as code files and work item attachments, is stored on Azure Storage. In the beginning, there was only one instance of VSTS, so any update went immediately to that instance. There was no incremental rollout as there is today.

Becoming multi-tenant

In February 2012 the VSTS team made their database multi-tenant. Instead of having a separate database for each account, the accounts were merged into a shared database. Because the tables became very big, they were partitioned. An interesting side note is that VSTS makes sure that each query includes a PartitionId value by pointing partition id zero to a non-existent filegroup. This means that every query that doesn’t specify a PartitionId fails immediately. Newly created accounts no longer needed their own database. Instead, VSTS could scale easily by adding accounts to an existing database and splitting that database into partitions.
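The partition id zero guard can be illustrated with a small in-memory analogy in C#. This is only a sketch of the idea, not how SQL Server or VSTS implements it: `PartitionedTable` and its members are hypothetical names, and the dictionary stands in for the partitioned tables. The point it shows is that an unset integer defaults to zero, so a caller who forgets to supply the tenant's partition id fails right away instead of silently reading across tenants.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory analogy of a tenant-partitioned table.
public sealed class PartitionedTable<TRow>
{
    // Partition 0 is deliberately never backed by storage, mirroring the
    // "partition id zero points to a non-existent filegroup" trick.
    private readonly Dictionary<int, List<TRow>> _partitions =
        new Dictionary<int, List<TRow>>();

    public void Insert(int partitionId, TRow row)
    {
        GetPartition(partitionId, createIfMissing: true).Add(row);
    }

    public IReadOnlyList<TRow> Query(int partitionId)
    {
        return GetPartition(partitionId, createIfMissing: false);
    }

    private List<TRow> GetPartition(int partitionId, bool createIfMissing)
    {
        // default(int) is 0, so a forgotten partition id ends up here.
        if (partitionId <= 0)
        {
            throw new InvalidOperationException(
                "Every query must specify a valid PartitionId.");
        }

        if (!_partitions.TryGetValue(partitionId, out var rows))
        {
            rows = new List<TRow>();
            if (createIfMissing)
            {
                _partitions[partitionId] = rows;
            }
        }

        return rows;
    }
}
```

Calling `Query(1)` returns only the rows of tenant 1, while `Query(0)` throws immediately.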

Becoming multi-instance

The next step on the journey of VSTS was to become multi-instance. Until then, there was one instance of VSTS that ran in a single datacenter. This meant that there was a single point of failure for VSTS. It also meant that any update to the system was immediately available to all users, so one bug could take out the whole system. Having VSTS in a single datacenter was also problematic because of legal regulations that require some companies to keep their data in a specific location.

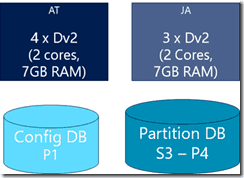

In 2013 the second instance came alive: SU0. A Scale Unit (SU) is a set of application tiers, job agents and databases. The following figure shows the default hardware requirements for a scale unit.

Figure 2 A scale unit has application tiers, job agents and databases

The statistics for VSTS (as of September 2017) are impressive:

- 31 services

- 15 regions

- 192 scale units (TFS has 17)

- 7,000 PaaS CPU Cores (TFS 1,110)

- 659 SQL Azure DBs (426 Standard, 233 Premium)

- 63 TB of DBs

- 1.3M accounts in largest SU

In the blog post on deployment strategies I’ll cover how scale units and rings allow VSTS to do gradual deployments.

Building for on-premises and cloud

VSTS and TFS are essentially the same product, but of course there are differences. By using a clever architecture based on plug-ins for components like authentication, identity and storage, and by using a shared server framework, the team has achieved around 90% code reuse between TFS and VSTS.

The shared server framework contains a lot of components that are shared between all the parts of VSTS. This helps the team avoid duplicate code and keeps the quality of foundational components as high as possible. The following list gives you a rough idea of what the framework contains:

- SQL

- Storage

- Account host

- Identity

- Registry

- Upgrade

- Batch jobs

- Secrets

- Tracing

- Circuit breakers

- Throttling

- And more

Circuit breakers and throttling

Circuit breakers are particularly interesting and absolutely required in a cloud service. VSTS consists of many different services that all communicate with each other. One request from your end can result in multiple requests to dependent services. The end result is then shown to you as a user. If one of those dependencies has problems, all services that depend on it become slower, fail or simply time out. The circuit breaker pattern helps you with this. Instead of trying to reach a poorly performing service over and over again, you notice that the service is having problems and short-circuit the calls to that service. This allows you to gracefully degrade your service without failing completely.

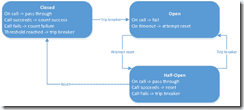

The following diagram depicts how a circuit breaker deals with requests, errors and periodic health checks. Normally, the circuit breaker is closed. Requests that come in pass through to the receiving service. If requests start to fail, the circuit breaker records the errors. If there are too many errors in a certain time window, the circuit breaker opens. Calls are now no longer passed on, instead a fallback action is executed. Once in a while, the open circuit breaker allows a call to go on to the depending service. If enough of those calls succeed, the circuit breaker resets itself and is now closed.

Figure 3 The different states of a circuit breaker
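To make these state transitions concrete, here is a minimal sketch of a circuit breaker in C#. It is not the VSTS implementation: `SimpleCircuitBreaker` and `CircuitState` are hypothetical names, and the sketch deliberately simplifies by counting consecutive failures instead of an error rate over a time window, by closing again after a single successful trial call, and by ignoring thread safety.

```csharp
using System;

public enum CircuitState { Closed, Open, HalfOpen }

// Hypothetical, single-threaded sketch of the closed/open/half-open cycle.
public sealed class SimpleCircuitBreaker
{
    private readonly int _failureThreshold;
    private readonly TimeSpan _openDuration;
    private readonly Func<DateTime> _clock;
    private int _consecutiveFailures;
    private DateTime _openedAt;

    public CircuitState State { get; private set; } = CircuitState.Closed;

    public SimpleCircuitBreaker(int failureThreshold, TimeSpan openDuration,
                                Func<DateTime> clock = null)
    {
        _failureThreshold = failureThreshold;
        _openDuration = openDuration;
        _clock = clock ?? (() => DateTime.UtcNow);
    }

    public T Execute<T>(Func<T> run, Func<T> fallback)
    {
        if (State == CircuitState.Open)
        {
            // While open, skip the real call until the wait time has passed.
            if (_clock() - _openedAt < _openDuration)
            {
                return fallback();
            }
            State = CircuitState.HalfOpen; // let one trial call through
        }

        try
        {
            T result = run();
            _consecutiveFailures = 0;
            State = CircuitState.Closed; // success (re)closes the circuit
            return result;
        }
        catch (Exception)
        {
            _consecutiveFailures++;
            // A failed trial call, or too many failures in a row, opens the circuit.
            if (State == CircuitState.HalfOpen ||
                _consecutiveFailures >= _failureThreshold)
            {
                State = CircuitState.Open;
                _openedAt = _clock();
            }
            return fallback();
        }
    }
}
```

The injectable clock is only there so the open period can be exercised without actually waiting.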

The following C# code snippet shows how such a circuit breaker can be configured:

    internal static readonly CommandPropertiesSetter InstalledExtensionsSettings = new CommandPropertiesSetter()
        // Number of requests that need to be accumulated to decide whether we may need to open the circuit
        .WithCircuitBreakerRequestVolumeThreshold(20)
        // Percentage of failures that forces the circuit to open
        .WithCircuitBreakerErrorThresholdPercentage(50)
        // Minimum back-off interval. This is added to the retry interval computed from deltaBackoff.
        .WithCircuitBreakerMinBackoff(TimeSpan.FromSeconds(0))
        // Maximum back-off interval. MaxBackoff is used if the computed retry interval is greater than MaxBackoff.
        .WithCircuitBreakerMaxBackoff(TimeSpan.FromSeconds(30))
        // Back-off interval between retries. Multiples of this timespan will be used for subsequent retry attempts.
        .WithCircuitBreakerDeltaBackoff(TimeSpan.FromMilliseconds(300))
        // If Run() execution time exceeds this limit the command is considered failed
        .WithExecutionTimeout(TimeSpan.FromSeconds(10.0))
        // Minimum interval at which the circuit breaker recalculates health
        .WithMetricsHealthSnapshotInterval(TimeSpan.FromSeconds(0.5))
        // Time window over which statistics are computed
        .WithMetricsRollingStatisticalWindowInMilliseconds(10000)
        // Number of buckets within the window
        .WithMetricsRollingStatisticalWindowBuckets(10);

Having defined these settings, you can now use the circuit breaker like this:

    var settings = CommandSetter.WithGroupKey(CommandServiceGroupKey.Framework)
        .AndCommandKey("FetchInstalledExtensions")
        .AndCommandPropertiesDefaults(InstalledExtensionsSettings);

    var commandService = new CommandService<List<InstalledExtension>>(requestContext,
        setter: settings,
        run: () => FetchInstalledExtensions(requestContext),
        fallback: () => FetchInstalledExtensionsFallback(requestContext));

    extensions = commandService.Execute();

This code calls FetchInstalledExtensions as long as the circuit breaker is closed. When the circuit breaker is tripped because of errors, timeouts or other problems, it starts calling FetchInstalledExtensionsFallback instead. While tripped, it occasionally executes the original command to see whether things are getting better. If so, it closes itself and everything is back to normal.
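How the request volume threshold, the error percentage and the bucketed rolling window from the settings could combine into a decision to trip can be sketched as follows. This assumes semantics similar to those documented for Netflix Hystrix, whose setting names the configuration above resembles; the actual VSTS framework may well behave differently. `RollingHealthWindow` and its members are hypothetical names, and time is passed in as elapsed milliseconds to keep the sketch deterministic.

```csharp
// Hypothetical helper: counts successes and failures in fixed-size time buckets.
public sealed class RollingHealthWindow
{
    private readonly long _bucketMs;
    private readonly long[] _bucketIds;  // which time bucket each slot currently holds
    private readonly int[] _successes;
    private readonly int[] _failures;

    public RollingHealthWindow(int windowMs, int bucketCount)
    {
        _bucketMs = windowMs / bucketCount;
        _bucketIds = new long[bucketCount];
        _successes = new int[bucketCount];
        _failures = new int[bucketCount];
        for (int i = 0; i < bucketCount; i++)
        {
            _bucketIds[i] = -1; // -1 marks an empty slot
        }
    }

    // nowMs: non-negative elapsed milliseconds since some fixed starting point.
    public void Record(long nowMs, bool success)
    {
        long bucketId = nowMs / _bucketMs;
        int slot = (int)(bucketId % _bucketIds.Length);
        if (_bucketIds[slot] != bucketId)
        {
            // The slot still holds data from an older bucket: reuse it.
            _bucketIds[slot] = bucketId;
            _successes[slot] = 0;
            _failures[slot] = 0;
        }
        if (success) _successes[slot]++; else _failures[slot]++;
    }

    public bool ShouldOpen(long nowMs, int requestVolumeThreshold,
                           int errorThresholdPercentage)
    {
        long newest = nowMs / _bucketMs;
        long oldest = newest - _bucketIds.Length + 1;
        int total = 0, failed = 0;
        for (int slot = 0; slot < _bucketIds.Length; slot++)
        {
            // Ignore empty slots and buckets that fell out of the window.
            if (_bucketIds[slot] < oldest || _bucketIds[slot] > newest) continue;
            total += _successes[slot] + _failures[slot];
            failed += _failures[slot];
        }
        // Too few requests in the window to make a meaningful decision.
        if (total < requestVolumeThreshold) return false;
        return failed * 100 >= errorThresholdPercentage * total;
    }
}
```

With a 10,000 ms window, 10 buckets, a volume threshold of 20 and an error threshold of 50, the sketch reports that the circuit should open once at least 20 requests were seen in the last ten seconds and at least half of them failed.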

An open circuit breaker is a cause for action. The fallback makes sure that there is no immediate problem, but there is a reason why the circuit breaker opened. Having good monitoring around this allows you to investigate what the problem is.

If you want to know more about circuit breakers, have a look at this page from Netflix, whose Hystrix library is a well-known implementation of this pattern: https://github.com/Netflix/Hystrix/wiki.

A circuit breaker is a bit of a blunt instrument. It makes the decision to sacrifice one thing for the good of the overall system. It does so by looking at the load across all users of the system. If this load increases too much and services start failing, a circuit breaker protects you. But what if a single user is generating a huge load? Tripping a circuit breaker would result in a degraded experience for all users. This problem is called the noisy neighbor issue, and this is where throttling comes in. Whenever a user has too many concurrent requests to the service or uses too many resources within a specific period, it’s time to throttle that user.

By tracking the number of requests and the resource utilization of a specific user, you can decide to delay the requests of that user or even completely block certain requests. The service informs a user about throttling through specific HTTP response headers:

- X-RateLimit-Delay – Duration the request was delayed, in ms

- X-RateLimit-Limit – Limit before throttling will occur

- X-RateLimit-Remaining – Remaining usage prior to the limit

- X-RateLimit-Reset – Seconds before usage is reset to 0

- Retry-After – Seconds to wait before retrying to avoid throttling

If delaying requests is not enough, the request is blocked and an HTTP 429 (Too Many Requests) response is returned. The combination of throttling and circuit breakers allows your cloud service to function under varying load while living in a world where servers go down and performance can differ.
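A per-user throttle that produces these headers could look roughly like the following sketch. It is an illustration under simplifying assumptions, not the VSTS algorithm: it only counts requests in a fixed time window, it blocks instead of delaying (so `X-RateLimit-Delay` is never set), and `UserThrottler`, `ThrottleDecision` and their members are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical result type: the status code and headers to send back.
public sealed class ThrottleDecision
{
    public int StatusCode { get; set; }
    public Dictionary<string, string> Headers { get; } =
        new Dictionary<string, string>();
}

// Hypothetical fixed-window request counter per user (not thread-safe).
public sealed class UserThrottler
{
    private sealed class Usage
    {
        public long WindowStart;
        public int Count;
    }

    private readonly int _limit;
    private readonly int _windowSeconds;
    private readonly Dictionary<string, Usage> _usage =
        new Dictionary<string, Usage>();

    public UserThrottler(int limit, int windowSeconds)
    {
        _limit = limit;
        _windowSeconds = windowSeconds;
    }

    public ThrottleDecision Check(string userId, long nowSeconds)
    {
        // Start a fresh window for new users or when the old window expired.
        if (!_usage.TryGetValue(userId, out var usage) ||
            nowSeconds - usage.WindowStart >= _windowSeconds)
        {
            usage = new Usage { WindowStart = nowSeconds, Count = 0 };
            _usage[userId] = usage;
        }

        usage.Count++;
        long reset = usage.WindowStart + _windowSeconds - nowSeconds;
        int remaining = Math.Max(0, _limit - usage.Count);
        var culture = CultureInfo.InvariantCulture;

        var decision = new ThrottleDecision
        {
            StatusCode = usage.Count > _limit ? 429 : 200
        };
        decision.Headers["X-RateLimit-Limit"] = _limit.ToString(culture);
        decision.Headers["X-RateLimit-Remaining"] = remaining.ToString(culture);
        decision.Headers["X-RateLimit-Reset"] = reset.ToString(culture);
        if (decision.StatusCode == 429)
        {
            // Tell the blocked caller when its usage counter starts over.
            decision.Headers["Retry-After"] = reset.ToString(culture);
        }
        return decision;
    }
}
```

Because usage is tracked per user id, one noisy user hits the limit without affecting the budget of anyone else.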

Database strategies

Almost all features in VSTS depend on the database in one way or another. When building database code, the VSTS team designs the code with a couple of fundamentals in mind:

- Design for cloud-first, on-prem second

- Optimize for high performance, multi-tenancy, and online upgrades

- Database only accessed from trusted application tiers (AT) and job agents (JA)

- All access through SQLResourceComponent and Stored Procedures

- Transactions managed in SQL code (not in mid-tier)

- Security is enforced on the middle tier

- Schemas used for separate subsystems

- PartitionID column used to partition tenants

Another feature of SQL Server that’s heavily used is SQL Server Extended Events (XEvents). XEvents allow you to track the resource consumption on your database. You can correlate data with specific user requests, jobs or background tasks. This helps you when investigating performance issues or deciding when to throttle a user. You can find more information at https://technet.microsoft.com/en-us/library/bb630354.aspx.

Conclusion

I find the story of TFS becoming a cloud service very interesting. Microsoft took an on-premises product, moved it to the cloud as it was and only then started learning what they needed to change in the architecture and in their deployment strategies. That may feel like trial and error, but they learned a lot from it and moved quickly to a real cloud service.

That’s it for this part. In the next part I’ll go into security and the changes Microsoft made to culture and tooling to make sure that VSTS is a robust and safe cloud service.