Do you know why the daughter always chops off 1” from the steak before putting it in the pan? Because her mother did it. Do you know why the mother did it? Because the pan was too small.

We do the same in software development. Some things are so common and have been used for so many years that we no longer think about them. One of those things is DTAP.

DTAP stands for Development, Test, Acceptance, and Production. It’s a standard way of splitting up your environments that’s been around for decades.

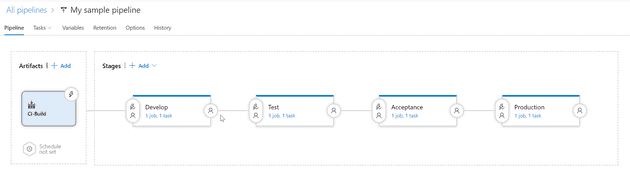

I visit a lot of customers for consulting on their DevOps pipeline. Almost all customers I visit have a pipeline that looks like this:

The DTAP principle is followed religiously and each and every pipeline has those exact environments. I’ve even heard someone say: ‘DevOps is automating your DTAP’. The horror!

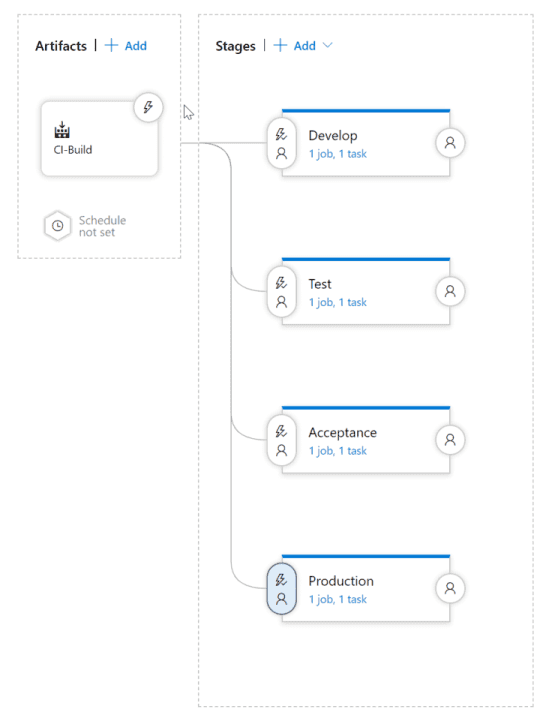

And let’s not even talk about this one:

If that’s your environment, please reach out on Twitter and let me help you.

Looking beyond DTAP

DTAP has its origins in an on-premises world. Requesting servers (physical or virtual) takes time, and once you have them you pay a fixed amount of money. There are variations: some teams treat their own PC as the D(evelopment) environment, while others add an extra environment for Education (training users) or Backup.

Fortunately, we now have the Cloud. It doesn’t matter whether it’s private, public or hybrid. As long as you can easily request and destroy environments, you can do whatever you want and add as many letters to DTAP as you like. And since you only pay for what you use, you shift from fixed upfront costs to a flexible model. This saves money, especially for environments that you destroy as soon as you’re finished. Cloud automation also helps with speed: creating an environment in the Cloud is way faster than the on-premises processes most companies have to suffer through.

A simple step: parallel environments

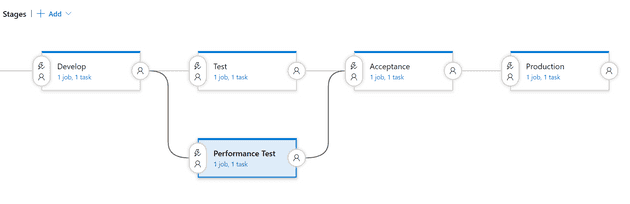

Having the ability to create environments on demand allows you to be more flexible in your release pipeline. Let’s start with something simple. If you want to run performance tests in parallel with some exploratory testing, just add some parallelization to your pipeline:

The Performance Test stage creates a new environment on demand, runs the load tests against it and then destroys the environment. Both Test and Performance Test have to succeed before the pipeline continues to Acceptance. If you add containers to the mix, this becomes even easier: you can run multiple environments on a single Kubernetes cluster and remove them once you’re done.
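Here is a minimal Python sketch of that stage layout. It is an illustration under assumptions, not how Azure DevOps actually schedules stages. `ephemeral_environment` is a hypothetical stand-in for whatever provisions and tears down your environment (Terraform, ARM templates, a Kubernetes namespace), and both test functions are placeholders. The `try`/`finally` makes sure the on-demand environment is destroyed even when the load test fails. The pipeline only moves on to Acceptance when both parallel stages succeed.

```python
import concurrent.futures
from contextlib import contextmanager

# Hypothetical bookkeeping standing in for real provisioning calls.
created: list[str] = []
destroyed: list[str] = []


@contextmanager
def ephemeral_environment(name: str):
    """Create an environment on demand and always destroy it afterwards (hypothetical)."""
    created.append(name)  # placeholder for provisioning infrastructure or a namespace
    try:
        yield f"https://{name}.example.test"  # hypothetical URL of the new environment
    finally:
        destroyed.append(name)  # tear down even if the tests raised an exception


def run_exploratory_tests() -> bool:
    # Placeholder: runs against the long-lived Test environment.
    return True


def run_performance_tests() -> bool:
    with ephemeral_environment("perf-test") as url:
        # Placeholder for a load test against `url`.
        return url.startswith("https://")


def run_test_stages() -> bool:
    """Run Test and Performance Test in parallel; both must pass to continue."""
    with concurrent.futures.ThreadPoolExecutor() as pool:
        futures = [pool.submit(run_exploratory_tests), pool.submit(run_performance_tests)]
        return all(f.result() for f in futures)


if __name__ == "__main__":
    if run_test_stages():
        print("Both stages passed, continuing to Acceptance")
```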

Azure DevOps already helps here by calling each box a Stage, not an environment. Stages are flexible and not directly tied to any physical or virtual representation of your environments.

Progressive deployments with Feature flags

Another popular DevOps technique is using Feature Flags to turn features on or off at runtime. This can be a simple if-statement that checks a value in your configuration file, or a more complex implementation that enables features based on the user, their location, or a certain percentage of all traffic.
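The sketch below covers the three cases just mentioned in plain Python: a simple on/off value, targeting by user or location, and a percentage rollout. `FeatureFlag` and `is_enabled` are hypothetical names and do not belong to any feature flag product's API. Hashing the flag name together with the user ID puts each user in a stable bucket from 0 to 99. That way a user keeps the same experience across requests instead of flipping randomly.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    enabled: bool = False  # the simple if-statement case: read from configuration
    allowed_users: set[str] = field(default_factory=set)  # explicit user targeting
    allowed_countries: set[str] = field(default_factory=set)  # location targeting
    rollout_percentage: int = 100  # share of remaining traffic that gets the feature


def _bucket(flag_name: str, user_id: str) -> int:
    """Map a user to a stable bucket 0-99 for this flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, flag: FeatureFlag, user_id: str, country: str) -> bool:
    if not flag.enabled:
        return False  # master switch: off for everyone
    if user_id in flag.allowed_users or country in flag.allowed_countries:
        return True  # targeted users and locations always get the feature
    return _bucket(flag_name, user_id) < flag.rollout_percentage


if __name__ == "__main__":
    new_search = FeatureFlag(enabled=True, allowed_users={"alice"}, rollout_percentage=10)
    print(is_enabled("new-search", new_search, user_id="alice", country="US"))  # True: targeted user
```

Increasing `rollout_percentage` step by step only adds users, because every user's bucket stays the same.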

Feature Flags allow you to work on a single branch of your code (avoiding merge work) and to run experiments by enabling functionality for a subset of your users and monitoring the usage, performance and errors to determine if you want to increase the usage or turn the feature off.

Testing in Production is an often heard term in this context. Instead of relying on a test or acceptance environment that doesn’t completely mirror production, you expose functionality to a small subset of users in the actual production environment. If something goes wrong, your (automatic) monitoring picks up on it and disables the feature. You can even run the backend code of your new feature but disable the frontend UI. That way, you can test all paths through the code but users won’t notice any degradation if you turn a feature off. Examples of this are the new timeline on Facebook or the new navigational UI in Azure DevOps.
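Automatically switching a feature off can be as simple as tracking the error rate of the requests that use it. In this minimal sketch, `FeatureKillSwitch` and `render_timeline` are hypothetical. The 5% threshold and the 100-request minimum sample are assumptions you would tune for your own service. A real setup would typically read these numbers from your monitoring system rather than count in-process.

```python
from dataclasses import dataclass


@dataclass
class FeatureKillSwitch:
    """Hypothetical monitor that switches a feature off when its error rate gets too high."""
    max_error_rate: float = 0.05  # assumed threshold: more than 5% failed requests
    min_requests: int = 100  # assumed minimum sample before judging the feature
    requests: int = 0
    errors: int = 0
    enabled: bool = True

    def record(self, succeeded: bool) -> None:
        """Record one request that used the feature and re-evaluate the flag."""
        self.requests += 1
        if not succeeded:
            self.errors += 1
        if (self.enabled
                and self.requests >= self.min_requests
                and self.errors / self.requests > self.max_error_rate):
            # Flip the flag off: users fall back to the old code path, no redeploy needed.
            self.enabled = False


def render_timeline(switch: FeatureKillSwitch) -> str:
    # The feature flag check in the UI; the old path stays available as the fallback.
    return "new timeline" if switch.enabled else "old timeline"
```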

With A/B testing, you show two different versions of your application to different groups of users. You then monitor usage and use the data to decide which version is the most successful. This can range from small things, like a textual change in your shopping cart, to a larger feature like a new search approach. By monitoring the two (or more!) versions for statistically significant differences, you can steer your backlog and the direction of your product. You can even ask users for their opinion inside the product (NPS is often used for this) to get even more data.
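An A/B test needs two ingredients: a stable way to assign users to a version, and a way to decide whether a difference in results is significant. This sketch uses a hash for assignment and a two-proportion z-test for conversion rates. That test is one common choice for large samples, not the only one, and experimentation platforms may use other statistics. The function names and the experiment name are illustrative only.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically assign a user to a variant so they always see the same version."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if both versions were equal
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value under the normal approximation
    return z, p_value


if __name__ == "__main__":
    # Made-up numbers: 4.0% vs 5.2% conversion with 5,000 users per variant.
    z, p = two_proportion_z_test(200, 5000, 260, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up numbers, z is about 2.86 and p is well below 0.05. That would count as a significant difference at the usual 5% level.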

LaunchDarkly is a great place to start if you want to get going with feature flags.

Ring based deployments

Feature Flags offer fine-grained control over feature availability, but only after the code has been deployed. Doing the actual deployment in phases is what ring based deployments are for. You split your users into groups (rings) and deploy your code to one group at a time. This means that different groups of users end up on different environments, for example when you run multiple data centers.

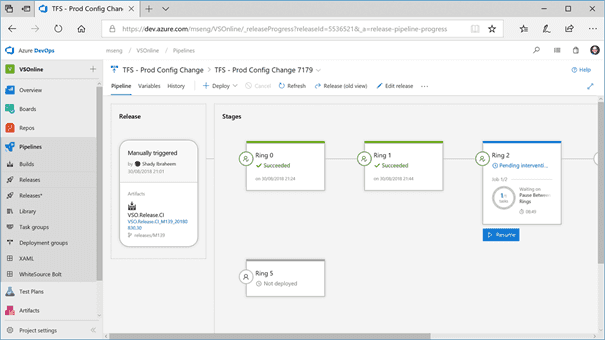

That’s the deployment model used by Azure DevOps Services. Because it is a cloud service that’s online 24/7 and deployed all over the world, the ring based deployment model allows the team to gradually roll out changes and monitor their success without affecting all users at once.

Ring 0 is where the team itself lives and where the Microsoft MVPs have their accounts. Ring 0 is the first to get new bits, but also the one that could potentially break. The other rings expose new functionality to customers. Between rings there is a wait period in which the team monitors for problems. If the deployment isn’t cancelled, the next ring is deployed. This is why the release notes always mention that the deployment will take a couple of weeks, and why new functionality may not be visible in your organization yet.
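The deploy, wait and monitor loop between rings can be sketched in a few lines of Python. This illustrates the pattern under assumptions and does not describe how the Azure DevOps team actually ships. `deploy` and `is_healthy` are hypothetical callbacks for your own deployment and monitoring, and the ring names and wait time are placeholders. `sleep` is injectable so the loop can be tested without really waiting.

```python
import time
from typing import Callable, Sequence


def ring_rollout(
    rings: Sequence[str],
    deploy: Callable[[str], None],
    is_healthy: Callable[[str], bool],
    wait_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Deploy ring by ring and stop when a ring looks unhealthy after its wait period.

    Returns the rings that received the new version.
    """
    deployed: list[str] = []
    for ring in rings:
        deploy(ring)
        deployed.append(ring)
        sleep(wait_seconds)  # wait period: give monitoring time to collect data
        if not is_healthy(ring):
            break  # cancel: later rings never receive the new bits
    return deployed
```

If ring 1 turns out unhealthy, `ring_rollout(["ring0", "ring1", "ring2"], ...)` returns `["ring0", "ring1"]` and ring 2 is never touched.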

Release Gates

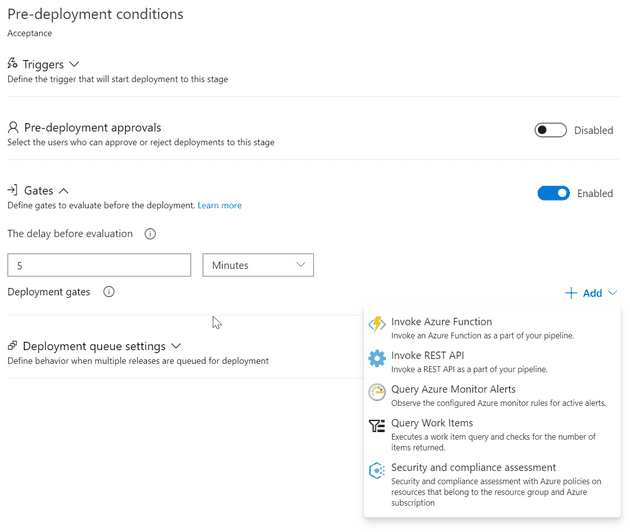

The monitoring between stages can be done with Release Gates. Release Gates sit between environments and monitor some external condition. As long as the condition is not met, the gate is closed. Once the gate opens, the release continues. Out of the box you get the following Release Gates in Azure DevOps:

Invoking an Azure Function is the most extensible option. In your Azure Function you can run any checks you want, such as integrating with third-party applications like ServiceNow. Once the Azure Function returns a successful result, the pipeline continues.
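Conceptually, a gate keeps re-evaluating its condition until it passes or a timeout expires. This loosely mirrors the re-evaluation interval and timeout you configure on gates in Azure DevOps. The plain Python sketch below only mimics that behaviour and is not the Azure DevOps implementation. `check` stands in for whatever your Azure Function verifies, for example a hypothetical query that no open ServiceNow incidents exist. `clock` and `sleep` are injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable


def wait_for_gate(
    check: Callable[[], bool],
    sampling_interval: float,
    timeout: float,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Re-evaluate `check` until it passes (gate opens) or `timeout` expires (gate fails)."""
    deadline = clock() + timeout
    while True:
        if check():
            return True  # gate open: the release continues
        if clock() + sampling_interval > deadline:
            return False  # no time left for another evaluation: the gate fails
        sleep(sampling_interval)  # wait before re-evaluating the condition
```

Keeping the condition in a single `check` callable mirrors why the Azure Function gate is so flexible: the gate logic stays generic, and all integration-specific code lives in one place.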

Conclusion

Traditional DTAP can still be useful, but I find it too restrictive. If you can easily create and destroy environments, why not add stages on demand to speed up your pipeline? Combine that with feature flags, ring based deployments and release gates, and your pipelines can be way more flexible than a sequential DTAP pipeline.

So please, take a second look at your release pipeline and see if DTAP is really what you want or if there are better ways to deploy your application and make your users happy.