Every time I get a message that a platform is going to be down for maintenance sometime during the night or weekend, I cringe a little. I don’t envy the engineer who has to do that deployment. Yes, it’s true that deployments are hard. We all know the ‘it works on my machine’ excuse. But now that we live in a cloud world, customers expect applications that are available 24/7 and don’t have downtime during updates. The biggest advantage? Deployments during working hours, preferably on Monday morning, are becoming the new norm!

In this post I want to highlight some principles that will help you adapt to the new cloud world and continuous delivery practices. For background info and examples I’ll share details of the journey of the Microsoft Visual Studio Team Services team on their road to 24/7 uptime and Monday morning deployments.

This is part 8 of a series on DevOps at Microsoft:

- Moving to Agile & the Cloud

- Planning and alignment when scaling Agile

- A day in the life of an Engineer

- Running the business on metrics

- The architecture of VSTS

- Changing the story around security

- How to shift quality left in a cloud and DevOps world

- Deploying in a continuous delivery and cloud world or how to deploy on Monday morning

- The end result

How not to deploy

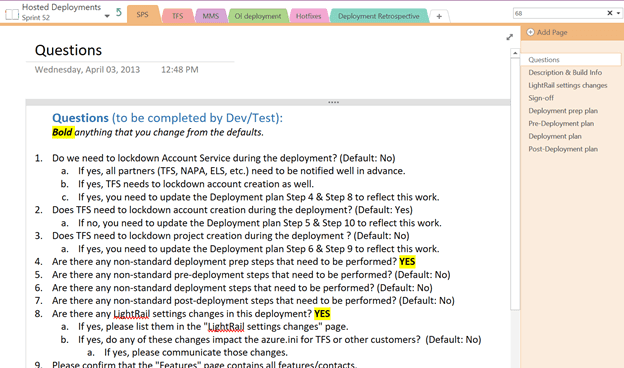

Today the VSTS team uses a mature deployment process, but that’s not how it started. Take a look at the following screenshots to get a feeling for how Microsoft deployed VSTS in the early days of the service.

Figure 1 Deployment recipes consisted of a long list of manual steps

Can you imagine how it feels to be the engineer responsible for following these steps to take a new deployment to production? We’ve all seen these lists of manual steps and it’s not hard to imagine how many things can go wrong.

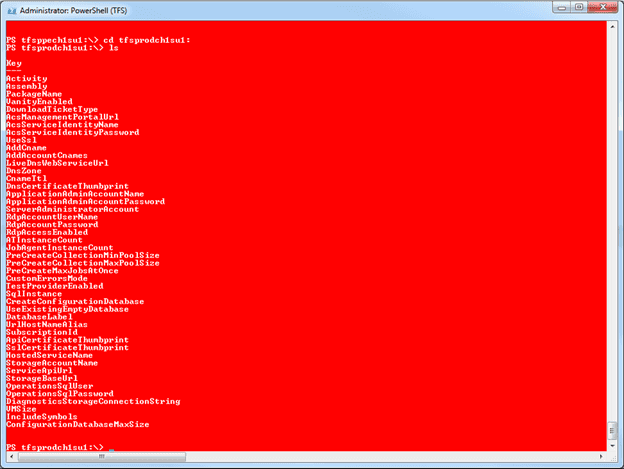

During a production deployment around 50 engineers joined a Skype call where they could all see the following PowerShell window. The red background meant that they were really working against production and should be careful about what they were doing.

Figure 2 Imagine being on a Skype call with 50 engineers all anxiously watching the deployment commands

What I see time and time again is that these manual deployments lead to the following chain reaction: manual steps lead to mistakes → mistakes are to be avoided → we deploy as little as possible to guarantee stability of the production system.

And there is the core of most deployment problems that companies experience. Deploying less often is not the solution to better deployments. On the contrary, deploying less often leads to bigger deployments. Bigger deployments have more moving parts, so each one has a larger chance of containing a mistake, and that means more errors in production.

If you want fewer problems when doing production deployments, deploy more often. Make the deployments small and easy to understand, and deploy them immediately instead of waiting for some magic moment in time when you deploy a whole bunch of changes.
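To put rough numbers on this argument, here is a minimal sketch. It assumes, purely for illustration, that every change has the same small chance of being faulty and that changes fail independently of each other. The function name and the 2% figure are my own assumptions, not measurements from the VSTS team.

```python
def batch_failure_probability(changes: int, p_change_fails: float) -> float:
    """Chance that a deployment with `changes` independent changes
    contains at least one faulty change."""
    return 1.0 - (1.0 - p_change_fails) ** changes


if __name__ == "__main__":
    # Assumed for illustration: each change has a 2% chance of being faulty.
    for size in (1, 5, 20, 50):
        chance = batch_failure_probability(size, 0.02)
        print(f"{size:>2} changes per deployment: {chance:.0%} chance of a problem")
```

Under these assumptions the total number of faulty changes stays the same however you batch them. What changes is that a failing small deployment leaves you with one or two suspects instead of fifty.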

If it hurts, do it more often. If deployments hurt, don’t put them off. Do them more frequently.

For more info, see the article FrequencyReducesDifficulty by Martin Fowler, which illustrates this concept.

Automate all the things

The first step that you absolutely have to take is to implement a good set of quality checks. Baking quality into your process is essential to be able to deploy with confidence. Have a good set of unit tests (see the blog post on testing for more details), use pull requests, and automate code quality checks. Those are the basics that you should have in place. I try to run as many of these static analysis checks as possible in my continuous integration builds. These CI builds are linked to pull requests and a branching strategy that prevents anyone from merging directly to master.

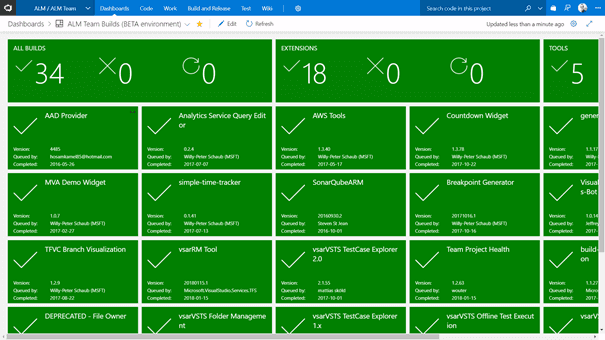

Make sure that the quality of your builds and releases is highly visible to everyone. This is one of the reasons why I built the Team Project Health extension with the ALM Rangers. Your quality check is easy: make sure that you stay green. If things go wrong and red tiles show up, fix them. Make sure that your code is always in a healthy state.

Figure 3 This dashboard uses the Team Project Health extension to visualize the health of all your pipelines

With the basics in place, the next step is to get rid of manual deployments. Automate your deployment and make sure that no human intervention is required for a successful deployment. This has two effects. First, you avoid manual errors. Second, because the process is automated, you can do it as often as you want.

When automating your deployments, make sure to invest in creating a single deployment process that works locally on a developer’s machine and on test and production environments. Most of the time this means that you create a set of scripts that accept configuration data, which allows them to run on all kinds of environments. Having a single way of deploying your application means that the script will be tested over and over, much more than a script that only runs during a production deployment. For example, the VSTS team has invested heavily in this and made sure that a developer can run a single script to deploy a complete VSTS environment locally on their own machine to test new features.
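A minimal sketch of what "one script, many environments" can look like. Everything here is hypothetical: the `EnvironmentConfig` fields, the server names and the deployment steps are made up for illustration and are not the scripts the VSTS team uses. The point is only that the same code path runs everywhere and only the configuration data differs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class EnvironmentConfig:
    """Hypothetical per-environment settings passed to the one deployment script."""
    name: str
    database_server: str
    instance_count: int
    requires_approval: bool


# Assumed example values; real settings would come from your own configuration store.
ENVIRONMENTS: Dict[str, EnvironmentConfig] = {
    "local": EnvironmentConfig("local", "localhost", 1, False),
    "test": EnvironmentConfig("test", "sql-test.example.internal", 2, False),
    "prod": EnvironmentConfig("prod", "sql-prod.example.internal", 10, True),
}


def plan_deployment(config: EnvironmentConfig, build_id: str) -> List[str]:
    """Return the ordered deployment steps for one environment."""
    steps = [
        f"migrate database on {config.database_server}",
        f"deploy build {build_id} to {config.instance_count} instance(s)",
        "run smoke tests",
    ]
    if config.requires_approval:
        # The only difference for production is an extra step driven by config.
        steps.insert(0, "wait for approval")
    return steps


if __name__ == "__main__":
    for name in ("local", "test", "prod"):
        print(name, plan_deployment(ENVIRONMENTS[name], "build-123"))
```

Because production differs only in data, every local and test run exercises the same logic that will later run against production.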

The next step is deciding how you’re going to execute your scripts against test and production environments. VSTS Release Management is the best tool to help you with this. Release Management offers all the orchestration you need around your deployments. Release Management allows you to define environments, approvers, gates and more to fully orchestrate the deployment across all your environments while having full traceability.

Gates is a new feature that I want to highlight. Gates allow you to configure automated checks that decide if your deployment can continue. Checks can be things like running a query to make sure there are no open bugs, checking with an external product like ServiceNow or even a Twitter sentiment analysis. You can also set a time delay before your release proceeds to the next environment.
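Conceptually, a gate is just a check that returns "go" or "no go", and the release continues only when all of them pass. The sketch below shows that idea in plain Python. It is not the Release Management API: the gate names and helper functions are hypothetical, and the inputs (bug count, sentiment score) are assumed to have been fetched elsewhere.

```python
from typing import Callable, Dict, List, Tuple

# A gate is any zero-argument check that returns True when the release may continue.
Gate = Callable[[], bool]


def no_open_bugs(open_bug_count: int) -> Gate:
    """Gate that passes only when the already-fetched bug count is zero."""
    return lambda: open_bug_count == 0


def sentiment_at_least(score: float, minimum: float) -> Gate:
    """Gate that passes when an already-computed sentiment score is high enough."""
    return lambda: score >= minimum


def evaluate_gates(gates: Dict[str, Gate]) -> Tuple[bool, List[str]]:
    """Run every gate and return (all_passed, names_of_failed_gates)."""
    failed = [name for name, check in gates.items() if not check()]
    return (len(failed) == 0, failed)


if __name__ == "__main__":
    can_continue, failed = evaluate_gates({
        "no open bugs": no_open_bugs(open_bug_count=2),
        "twitter sentiment": sentiment_at_least(score=0.8, minimum=0.5),
    })
    print("continue" if can_continue else f"blocked by: {failed}")
```

Returning the names of the failed gates, not just a boolean, keeps the decision traceable when a release is blocked.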

Ring deployments

A time delay is especially important when you introduce another concept to your deployments: rings. Instead of deploying your new feature to all of your data centers/servers/environments in one go, you split your environment into multiple rings and deploy to them one by one. This mechanism is actively used by VSTS. VSTS is divided into multiple rings and deployments are released gradually over these rings. The following table details the ring structure of VSTS.

| Ring | Purpose | Customer type | Data center |
|---|---|---|---|
| 0 | Surface most of the customer-impacting bugs introduced by the deployment | Internal only, high tolerance for risk and bugs | US West Central |
| 1 | Surface bugs in areas that we do not dogfood | Customers using a breadth of the product, especially areas we do not dogfood (TFVC, hosted build, etc). Should be in a US time zone. | A small data center |
| 2 | Surface scale-related issues | Public accounts. Ideally free accounts, using a diverse set of the features available. | A medium to large US data center |
| 3 | Surface scale issues common in internal accounts and international related issues | Large internal accounts, European accounts | Internal data center and a European data center |
| 4 | Update the remaining scale units | Everyone else | All the rest |

Ring zero is where the VSTS team lives. This ring also hosts the account for the Microsoft ALM Rangers and for Microsoft ALM MVPs. This ring sees the most updates and acts as a canary instance. After each ring there is a bake time of at least 24 hours before the deployment proceeds to the next ring. This allows the team to catch bugs before they affect a lot of customers.
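The ring-by-ring rollout with a bake time can be sketched as a simple loop. This is an illustration under assumptions, not the VSTS pipeline: `roll_out` and the `is_healthy` callback are hypothetical names, each deployment is treated as instantaneous, and the 24 hours is used as the exact wait rather than a minimum.

```python
from datetime import datetime, timedelta
from typing import Callable, List, Sequence, Tuple

RINGS = [0, 1, 2, 3, 4]
BAKE_TIME = timedelta(hours=24)  # from the text: at least 24 hours between rings


def roll_out(
    rings: Sequence[int],
    is_healthy: Callable[[int], bool],
    start: datetime,
    bake_time: timedelta = BAKE_TIME,
) -> List[Tuple[int, datetime]]:
    """Deploy ring by ring and return (ring, deployment time) for each ring reached.

    `is_healthy` is a hypothetical callback that reports whether a ring
    still looks good once its bake time is over.
    """
    deployed = []
    when = start
    for ring in rings:
        deployed.append((ring, when))
        if not is_healthy(ring):
            break  # stop here: later rings never receive the bad build
        when += bake_time
    return deployed


if __name__ == "__main__":
    monday_morning = datetime(2018, 1, 8, 9, 0)
    # Simulate a bug that only surfaces in ring 1.
    for ring, when in roll_out(RINGS, lambda ring: ring != 1, monday_morning):
        print(f"ring {ring} deployed at {when:%a %H:%M}")
```

In the simulated run, the problem in ring 1 stops the rollout, so rings 2 to 4, where most customers live, are never touched.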

The following figure shows the VSTS ring structure in Release Management.

Figure 4 VSTS deploys across a set of rings to make deployments as safe as possible

Monitor

Your work isn’t done after the deployment finishes. To be able to function in a cloud and continuous delivery world you need to monitor your application in production. By integrating monitoring into Release Management you can even implement automated rollback when monitoring shows there is a problem. Application Insights is a great way to get started with monitoring your application.

There are a lot of things you can monitor for. The following table lists some of the performance counters that the VSTS team monitors to decide if a deployment can proceed or has to be rolled back. If the threshold is passed, an alert is issued, a snapshot of the environment state is taken and a rollback is executed.

| Counter | Threshold |
|---|---|
| ASP.NET v4.0.30319\Requests Queued | 25 |
| LogicalDisk\% Free Space | 10 |
| Memory\Available Mbytes | 256 |
| Processor(_Total)\% Processor Time | 95% |
| TFS Services:Service Bus(_Total)\Client Notifications Average Send Time | 10s |
| TFS Services\Average SQL Connect Time | 3s |
| TFS Services\Active Application Service Hosts | 4000 |
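A rollback decision based on such a table could be sketched as follows. This is a simplified illustration, not the VSTS monitoring code. The counter names and limits are taken from the table, but the direction of each check is my assumption: I treat available memory as a floor (breach when the value drops below the limit) and the other two counters as ceilings. The `Threshold` class and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List

REQUESTS_QUEUED = r"ASP.NET v4.0.30319\Requests Queued"
AVAILABLE_MBYTES = r"Memory\Available Mbytes"
SQL_CONNECT_SECONDS = r"TFS Services\Average SQL Connect Time"


@dataclass(frozen=True)
class Threshold:
    counter: str
    limit: float
    breach_when_below: bool = False  # assumption: most counters are ceilings


# A subset of the table above; directions are assumed, not stated in the source.
THRESHOLDS = [
    Threshold(REQUESTS_QUEUED, 25),
    Threshold(AVAILABLE_MBYTES, 256, breach_when_below=True),
    Threshold(SQL_CONNECT_SECONDS, 3),
]


def breached(samples: Dict[str, float]) -> List[str]:
    """Return the counters whose sampled value crossed its threshold."""
    result = []
    for threshold in THRESHOLDS:
        value = samples.get(threshold.counter)
        if value is None:
            continue  # no sample for this counter, nothing to judge
        if threshold.breach_when_below:
            crossed = value < threshold.limit
        else:
            crossed = value > threshold.limit
        if crossed:
            result.append(threshold.counter)
    return result


def decide(samples: Dict[str, float]) -> str:
    """Return 'rollback' when any threshold is crossed, otherwise 'proceed'."""
    return "rollback" if breached(samples) else "proceed"


if __name__ == "__main__":
    print(decide({REQUESTS_QUEUED: 40, AVAILABLE_MBYTES: 2048}))
```

In a real pipeline the `rollback` branch is where the alert, the environment snapshot and the actual rollback described above would be triggered.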

Takeaways

Deciding where to start when moving to continuous delivery can be daunting. Try to use the following principles as your starting point and evolve as you go. To summarize:

- Deploy often. If it hurts, do it more.

- Stay green throughout the sprint.

- Use consistent deployment tooling in dev, test and prod.

- Use Release Management to automate and orchestrate your deployment and enable the engineering team to do deployments.

- Break up your deployment into rings.

- Monitor your production environment and know when to roll back.

I know this is easier said than done. But continuous delivery will make your engineers happy and your product better, and it is absolutely worth the investment. VSTS is the best tool to help you implement a complete delivery pipeline. So what’s stopping you?